At 15c/million input tokens, will GPT-4o mini be the foundation of Agentic Workflows?

Just 2 hours ago, OpenAI announced GPT-4o mini. A mouthful, yes. But its pricing? Mouthwatering. It is 33x cheaper than 4o on input tokens, with a performance gap that won't matter for many tasks.

As founder and CTO of adorno.ai, an audio generation company, I make it my job to understand AI's latest promises and its actual potential. I will try my best not to sound like an OpenAI promoter here; I actually dislike the company's principles in general. But this price/performance ratio is bonkers.

Okay, so it's cheap, but is it any good? The answer is yes. Yes indeed.

- Mini consistently beats other small LLMs on reasoning, math, coding and multimodal reasoning benchmarks, with a single shortcoming on MathVista.

- It often scores close to the full 4o; see MATH, HumanEval and MMLU.

All of this while reportedly being close in size to Llama 8B. I doubt the model really has anywhere near as few as 8B parameters. More likely it is a larger model made cheap to serve through sparsity, quantization, pruning and that sexy new tokenizer from 4o.

So, with capable LLMs cheaper than ever and their output quality still high, what does this mean for building an app in 2024?

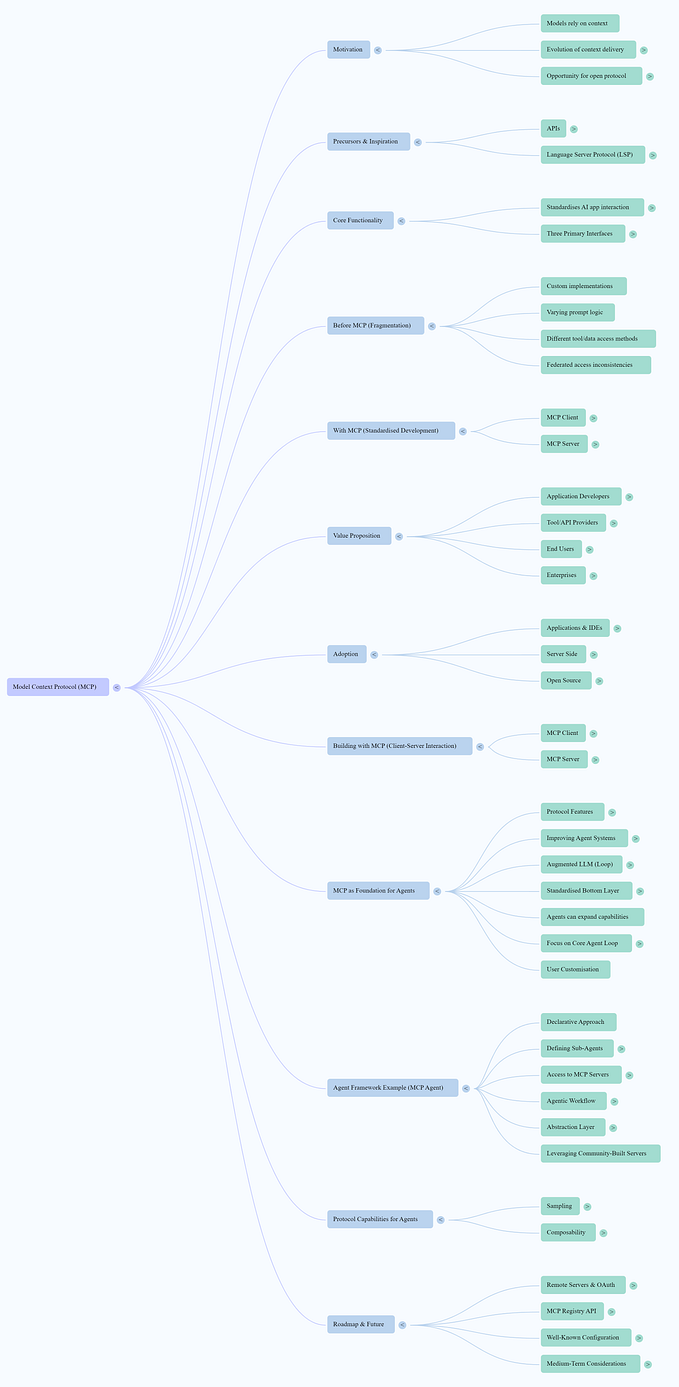

I could list loads, but the most interesting one for me is agentic workflows. Agentic workflows don't expect an LLM to give you an answer in one shot. Instead, generating an answer becomes a process of small steps, each of which is hard for an LLM to mess up.

Say you want some code written:

- The first “agent” writes the code

- The agent is then asked to quality-check the code and improve or fix it where needed

- Another agent writes a unit test

- Yet another agent has access to a runtime environment and runs the generated code against the unit test

- If the code fails, we head back to step 2, but now with an error log
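The five steps above can be sketched as a small loop. This is a minimal sketch, assuming each "agent" is a plain Python function; in a real workflow each would wrap an LLM call (for instance a chat-completion request to gpt-4o-mini). The names `agentic_codegen`, `run_tests`, `Result` and the `fake_*` stubs are hypothetical and only stand in for model calls so the loop runs offline.

```python
import traceback
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Result:
    code: str
    passed: bool
    attempts: int
    error_log: Optional[str] = None


def run_tests(code: str, test: str) -> Optional[str]:
    """Hypothetical 'runtime' agent: execute the code, then the unit test, in a
    fresh namespace. Returns the traceback as an error log, or None on success.
    WARNING: exec() runs untrusted model output; use a real sandbox in practice."""
    namespace: dict = {}
    try:
        exec(code, namespace)
        exec(test, namespace)
    except Exception:
        return traceback.format_exc()
    return None


def agentic_codegen(
    task: str,
    write_code: Callable[[str], str],
    review: Callable[[str, str, Optional[str]], str],
    write_test: Callable[[str], str],
    max_attempts: int = 3,
) -> Result:
    code = write_code(task)            # step 1: first agent drafts the code
    code = review(task, code, None)    # step 2: quality-check and improve
    test = write_test(task)            # step 3: another agent writes a unit test
    error_log: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        error_log = run_tests(code, test)  # step 4: run the code against the test
        if error_log is None:
            return Result(code, True, attempt)
        if attempt < max_attempts:
            # step 5: back to step 2, now with the error log
            code = review(task, code, error_log)
    return Result(code, False, max_attempts, error_log)


# Stub "agents" standing in for LLM calls (hypothetical).
def fake_write_code(task: str) -> str:
    return "def add(a, b):\n    return a - b\n"  # deliberately buggy first draft


def fake_review(task: str, code: str, error_log: Optional[str]) -> str:
    if error_log is None:
        return code  # nothing obviously wrong yet
    return code.replace("a - b", "a + b")  # "fix" informed by the failing test


def fake_write_test(task: str) -> str:
    return "assert add(2, 3) == 5\n"


result = agentic_codegen("Write add(a, b).", fake_write_code, fake_review, fake_write_test)
print(result.passed, result.attempts)  # True 2
```

The first test run fails, the error log goes back to the review step, and the second run passes. Capping the retries with `max_attempts` keeps a stubborn failure from looping (and spending tokens) forever.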

There's been a huge rise in papers on LLM-based agents in the past two years, showing clear gains in output quality and in the complexity of tasks that can be handled. But there are two obvious problems:

- Latency: because agents talk to each other, usually sequentially, output generation takes longer.

- Cost: many more tokens are spent feeding one agent's output into another's input. Over and over.

But these are exactly the problems GPT-4o mini addresses. It's among the cheapest capable LLMs available to date, yet it punches way above its weight class. I'm actually incorporating it into some of our preprocessing workflows at adorno.ai right now.
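To put the cost problem in numbers, here is some back-of-the-envelope arithmetic. It is a rough sketch under stated assumptions: the prices are the launch list prices in USD per million tokens (0.15 input / 0.60 output for mini, 5.00 / 15.00 for 4o), which may change, and the token counts and the "every agent re-reads all earlier outputs" model are hypothetical. The helpers `pipeline_tokens` and `cost_usd` are illustrative names, not part of any API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    usd_per_m_input: float   # USD per million input tokens
    usd_per_m_output: float  # USD per million output tokens


# Assumed launch list prices; check current pricing before relying on them.
GPT_4O_MINI = Pricing(0.15, 0.60)
GPT_4O = Pricing(5.00, 15.00)


def pipeline_tokens(steps: int, base_prompt: int, output_per_step: int) -> tuple[int, int]:
    """Total (input, output) tokens for a sequential pipeline in which every agent
    reads the original prompt plus all earlier agents' outputs (hypothetical model)."""
    total_in = total_out = 0
    context = base_prompt
    for _ in range(steps):
        total_in += context           # this agent reads the whole context so far
        total_out += output_per_step  # ...and writes its own output
        context += output_per_step    # which the next agent must also read
    return total_in, total_out


def cost_usd(tokens: tuple[int, int], price: Pricing) -> float:
    tokens_in, tokens_out = tokens
    return (tokens_in * price.usd_per_m_input + tokens_out * price.usd_per_m_output) / 1_000_000


single = pipeline_tokens(steps=1, base_prompt=1_000, output_per_step=500)
agentic = pipeline_tokens(steps=5, base_prompt=1_000, output_per_step=500)
print(agentic)                                  # (10000, 2500)
print(f"{cost_usd(single, GPT_4O):.4f}")        # 0.0125
print(f"{cost_usd(agentic, GPT_4O):.4f}")       # 0.0875
print(f"{cost_usd(agentic, GPT_4O_MINI):.4f}")  # 0.0030
```

Under these assumptions, the five-step pipeline multiplies the input tokens tenfold, yet running it on mini costs roughly a quarter of a single one-shot call to 4o.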